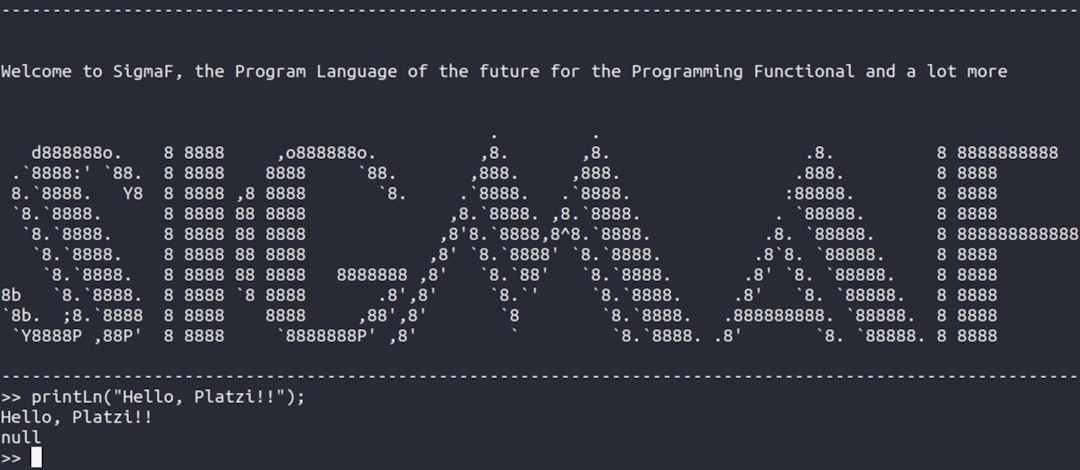

In the evolving landscape of AI-assisted video generation, platforms like Kaiber have drawn attention for their impressive rendering capabilities. However, as with any advanced tool in the early stages of adoption, technical hiccups occasionally arise. One issue that recently plagued users was an endless rendering loop in which jobs sat in an internal state dubbed job_stuck_in_progress. It stopped users mid-creation and consumed system resources unnecessarily. The problem persisted until an automated timeout refresh script was implemented to recover stalled sessions and resume output generation without user intervention.

TL;DR:

Kaiber video rendering suffered from a significant flaw: video jobs could enter an infinite loop, labeled job_stuck_in_progress, and stall indefinitely. The bug disrupted users during the final stages of rendering and required immediate backend intervention. A timeout detection and refresh script was eventually developed to resolve the issue automatically. With this automation, the platform now detects stalled jobs and resumes rendering without user action or data loss.

The Nature of the Kaiber Rendering Problem

Kaiber’s architecture lets users create increasingly complex AI-generated videos by combining input prompts, images, and motion specifications. Rendering is orchestrated by a job queuing system that moves each task from queued to rendering and finally to completed. However, errors in asynchronous job handling produced a state mismatch. As a result, certain video jobs stayed in progress indefinitely, neither timing out nor completing.

After detailed logging and observation, engineers traced the problem to back-end processes that never received successful termination callbacks. Because the status was never reset or flagged as failed, the system’s health checks skipped over these “ghost” jobs, unaware that action was needed.
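The following minimal Python sketch makes the failure mode concrete. It models a three-stage job lifecycle in which the termination callback is the only path out of the rendering state. The names (`JobState`, `on_termination_callback`, `naive_health_check`) are hypothetical and not taken from Kaiber's code. The sketch shows only one thing: a health check that looks for explicit failures never sees a job whose callback was lost.

```python
from dataclasses import dataclass
from enum import Enum


class JobState(Enum):
    QUEUED = "queued"
    RENDERING = "rendering"
    COMPLETED = "completed"
    FAILED = "failed"


@dataclass
class Job:
    job_id: str
    state: JobState = JobState.QUEUED


def start(job: Job) -> None:
    # Dispatch to the render farm: queued -> rendering.
    job.state = JobState.RENDERING


def on_termination_callback(job: Job, success: bool) -> None:
    # The only code path that moves a job out of RENDERING.
    job.state = JobState.COMPLETED if success else JobState.FAILED


def naive_health_check(jobs: list[Job]) -> list[str]:
    # Reports only jobs that explicitly failed; a job whose callback
    # never arrived stays RENDERING and is never reported.
    return [j.job_id for j in jobs if j.state is JobState.FAILED]


jobs = [Job("a"), Job("b"), Job("c")]
for j in jobs:
    start(j)
on_termination_callback(jobs[0], success=True)
on_termination_callback(jobs[1], success=False)
# No callback ever arrives for job "c": it becomes a ghost job.

print(naive_health_check(jobs))       # ['b']
print([j.state.value for j in jobs])  # ['completed', 'failed', 'rendering']
```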

Symptoms Observed by Users

The issue became evident through user complaints on help forums and GitHub discussions. Common symptoms included:

- Render indicators showing “Generating video…” for hours without progression

- Continued CPU usage by the session, even though the visual output was not changing

- Absence of error messages or completion notifications

- No way to cancel or restart the job from the frontend

Together, these factors made troubleshooting frustrating for many. Most users assumed a connection or browser-side problem, which led to redundant input reloads and wasted GPU cycles.

Initial Workarounds and Interim Fixes

Before the automation was rolled out, some users discovered temporary fixes, including:

- Manually refreshing their session token and re-submitting frame sequences

- Clearing site cache and logging out to trigger internal job ID reassignment

- Manually requesting a backend refresh through the Kaiber support ticket system

These makeshift methods helped only a few users. Most jobs remained unrecoverable without backend intervention, causing significant delays in creative workflows, particularly for time-sensitive users such as agencies and educators.

Diagnosing the Core Problem

The rendering issue stemmed from a thread-locking problem in Kaiber’s job allocation system. The rendering engine queues work in batches and assigns internal flags to track each job. However, incomplete asynchronous calls left processes in a purgatory state: not formally failed, but not completed either. Jobs caught in this faulty transition were erroneously left flagged as in progress, surfacing as job_stuck_in_progress.
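The “purgatory” state is easy to reproduce in miniature. In the hypothetical asyncio sketch below, each render waits on a future that the worker is supposed to resolve. One future is never resolved, which mimics a lost termination callback. A bare `await` on it would hang forever, so the sketch bounds the wait with `asyncio.wait_for`. This is one general mitigation for unresolved calls, shown for illustration. It is not a description of how Kaiber's engine handles them.

```python
import asyncio


async def render_and_wait(callback: asyncio.Future, timeout_s: float) -> str:
    # Waits for the worker's termination callback. Without a timeout,
    # a callback that is never resolved would block here forever.
    try:
        result = await asyncio.wait_for(callback, timeout=timeout_s)
        return "completed" if result else "failed"
    except asyncio.TimeoutError:
        # Fail safe: the unresolved call becomes an explicit failure
        # instead of an indefinite "in progress" state.
        return "failed_timeout"


async def main() -> list[str]:
    loop = asyncio.get_running_loop()
    delivered = loop.create_future()
    lost = loop.create_future()  # simulates a callback that never arrives
    loop.call_later(0.01, delivered.set_result, True)
    return await asyncio.gather(
        render_and_wait(delivered, timeout_s=0.5),
        render_and_wait(lost, timeout_s=0.05),
    )


print(asyncio.run(main()))  # ['completed', 'failed_timeout']
```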

With this diagnosis in hand, developers built a watchdog subroutine to identify stale job lifecycles. Combining timestamp deltas with heartbeat checks, they introduced a time-based metric that detects inactivity beyond the expected rendering duration.
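A watchdog that combines the two signals might look like the following sketch. The record fields, the 600-second expected maximum, and the 120-second heartbeat grace period are illustrative assumptions, not values from Kaiber.

```python
from dataclasses import dataclass


@dataclass
class JobRecord:
    job_id: str
    state: str
    started_at: float      # when rendering began (seconds since epoch)
    last_heartbeat: float  # last time the worker reported progress


def is_stale(job: JobRecord, now: float, expected_max_s: float,
             heartbeat_grace_s: float) -> bool:
    # Only jobs still marked as rendering can be stale.
    if job.state != "rendering":
        return False
    # Timestamp delta: running longer than the expected render duration.
    overran = now - job.started_at > expected_max_s
    # Heartbeat check: the worker has gone quiet.
    silent = now - job.last_heartbeat > heartbeat_grace_s
    return overran and silent


now = 10_000.0
stuck = JobRecord("a", "rendering", started_at=now - 900, last_heartbeat=now - 800)
slow = JobRecord("b", "rendering", started_at=now - 900, last_heartbeat=now - 30)
done = JobRecord("c", "completed", started_at=now - 900, last_heartbeat=now - 800)
results = [is_stale(j, now, expected_max_s=600, heartbeat_grace_s=120)
           for j in (stuck, slow, done)]
print(results)  # [True, False, False]
```

Requiring both signals is a deliberate choice in this sketch. A job that overruns its estimate but is still heartbeating is treated as slow rather than stuck, so long but healthy renders are not restarted.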

The Timeout Refresh Script: How It Worked

The new component, internally referred to as RefreshDaemon, was engineered as a back-end monitor running on a five-minute polling cycle. Its work breaks down into four core functions:

- Job Identification: It queried all jobs that had remained in progress longer than the expected maximum render time (usually 2–10 minutes, depending on job parameters).

- State Verification: Once a job was confirmed inactive, it assigned a new job token and transferred the current metadata (prompts, animation frames, seed values, etc.).

- Process Resubmission: The refreshed task was dispatched to the rendering farm with a “resume_from_last_state” parameter, minimizing duplicate resource consumption.

- Logging & Alerting: It saved recovery logs and pinged health tracking dashboards, offering transparency in operation and facilitating post-mortem analyses.
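The four functions can be sketched as a single polling pass. Much of this is a hypothetical reconstruction: the `Job` fields, the frame-count heuristic for the 2–10 minute window, the 120-second heartbeat grace period, and the `dispatch` callable are all assumptions. Only three elements come from the description above: the five-minute cycle, the token reassignment with carried-over metadata, and the `resume_from_last_state` hint.

```python
import logging
import time
import uuid
from dataclasses import dataclass, field

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("refresh_daemon")

POLL_INTERVAL_S = 5 * 60  # five-minute polling cycle, per the article
HEARTBEAT_GRACE_S = 120   # hypothetical: silence needed to call a job inactive


@dataclass
class Job:
    token: str
    state: str
    started_at: float
    last_heartbeat: float
    metadata: dict = field(default_factory=dict)  # prompts, frames, seed, ...


def expected_max_render_s(job: Job) -> float:
    # Hypothetical heuristic: one second per frame, clamped to 2-10 minutes.
    frames = job.metadata.get("frames", 0)
    return min(10 * 60, max(2 * 60, float(frames)))


def refresh_pass(jobs: list[Job], dispatch, now: float) -> list[Job]:
    resubmitted = []
    for job in jobs:
        # 1. Job identification: still in progress past the expected maximum.
        if job.state != "rendering" or now - job.started_at <= expected_max_render_s(job):
            continue
        # 2. State verification: the worker has also stopped heartbeating.
        if now - job.last_heartbeat <= HEARTBEAT_GRACE_S:
            continue
        # Assign a new token and carry the metadata over unchanged.
        fresh = Job(token=uuid.uuid4().hex, state="rendering", started_at=now,
                    last_heartbeat=now, metadata=dict(job.metadata))
        # Retire the ghost record (assumption) so later passes skip it.
        job.state = "superseded"
        # 3. Process resubmission with the resume hint.
        dispatch(fresh, resume_from_last_state=True)
        # 4. Logging & alerting (a real system would also notify dashboards).
        log.info("recovered job %s as %s", job.token, fresh.token)
        resubmitted.append(fresh)
    return resubmitted


def run_forever(load_jobs, dispatch) -> None:
    # Poll on a fixed cycle; load_jobs and dispatch are hypothetical hooks.
    while True:
        refresh_pass(load_jobs(), dispatch, time.time())
        time.sleep(POLL_INTERVAL_S)


# Usage with an in-memory dispatcher.
sent = []


def fake_dispatch(job: Job, resume_from_last_state: bool) -> None:
    sent.append((job.metadata["seed"], resume_from_last_state))


now = 100_000.0
ghost = Job("old-1", "rendering", now - 900, now - 900, {"frames": 240, "seed": 42})
alive = Job("old-2", "rendering", now - 900, now - 10, {"frames": 240, "seed": 7})
recovered = refresh_pass([ghost, alive], fake_dispatch, now)
print(len(recovered), sent)  # 1 [(42, True)]
```

Retiring the old record is an extra assumption in this sketch. It prevents the same ghost job from being picked up again on the next pass. The second job has also overrun its estimate, but it is left alone because its worker is still heartbeating.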

This script let jobs stuck for hours re-enter the rendering queue automatically, as if the disruption had never occurred and without the end user noticing. Importantly, it preserved previously uploaded assets, settings, and random generator seeds, thus protecting output integrity.
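Carrying the seed over matters because generative pipelines typically draw their initial noise from a seeded pseudo-random generator, so the same seed reproduces the same draws. The sketch below uses Python's `random.Random` as a stand-in for a model's noise sampler. It assumes, without confirmation from the source, that Kaiber's renderer derives its randomness from the stored seed in a similar way.

```python
import random


def initial_noise(seed: int, n: int) -> list[float]:
    # Stand-in for a model's initial latent noise: a seeded PRNG
    # yields the same sequence every time for the same seed.
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


original = initial_noise(seed=1234, n=4)
resumed = initial_noise(seed=1234, n=4)   # re-run after recovery, same seed
reseeded = initial_noise(seed=5678, n=4)  # what a fresh seed would produce

print(original == resumed)  # True
print(original == reseeded)
```

A recovery path that silently picked a new seed would therefore yield a visibly different video, even with identical prompts and settings.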

Impact on Users

The release of the refresh utility was a turning point. Within hours of deployment, thousands of backlogged videos were completed without manual input. As the automation rolled out in waves, users began reporting sudden completions of projects they had abandoned the previous day.

Over the next week, key metrics observed included:

- 85% reduction in support tickets related to incomplete renders

- 30% faster average output rates as ghost jobs were cleared from queues

- Increased confidence among users with large-scale rendering needs

User sentiment on platforms like Reddit and Discord turned positive shortly after stabilization, with multiple users praising Kaiber for its quick diagnosis and fix. Users still asked, however, for clearer visual indicators when jobs are at risk of stalling, which developers acknowledged as a future UI improvement.

The Lessons Learned

This incident highlights several important technical and managerial takeaways regarding AI infrastructure and robustness:

- Error states must be fail-safe: Systems should default to recovery or rollback if active flags are not resolved in expected cycles.

- Job lifecycle monitoring is essential: Real-time insights into backend process health should form an integral part of any generation platform.

- Transparency accelerates trust: Prompt community updates about system errors and fixes often lead to stronger user goodwill.
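The first lesson can be built into the data model itself. A common pattern is a lease: an in-progress claim that expires unless the worker keeps renewing it, so an abandoned job decays to a recovery state by default. This is a generic, hypothetical sketch (the `Lease` class and its fields are illustrative), not a description of Kaiber's modules.

```python
from dataclasses import dataclass


@dataclass
class Lease:
    job_id: str
    expires_at: float  # the in-progress claim is valid until this time
    completed: bool = False

    def renew(self, now: float, ttl_s: float) -> None:
        # Workers must keep renewing while they make progress.
        if not self.completed:
            self.expires_at = now + ttl_s

    def status(self, now: float) -> str:
        # Fail-safe default: an unrenewed claim decays to "needs_recovery"
        # instead of staying "in_progress" forever.
        if self.completed:
            return "completed"
        return "in_progress" if now < self.expires_at else "needs_recovery"


lease = Lease("job-1", expires_at=60.0)
print(lease.status(now=30.0))   # in_progress
lease.renew(now=50.0, ttl_s=60.0)
print(lease.status(now=100.0))  # in_progress (renewed until 110)
print(lease.status(now=120.0))  # needs_recovery
```

With this shape, nobody has to remember to flag a stuck job. Silence alone moves it into the state a recovery process looks for.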

The Kaiber team now incorporates “automated lifecycle healing” modules in several parts of its pipeline. The feature is expected to extend beyond rendering to other generation stages, such as audio syncing and video interpolation, in future rollouts.

Conclusion

Video rendering is one of the most demanding aspects of modern AI creativity platforms, often pushing the limits of both compute availability and software scalability. The job_stuck_in_progress error was a severe yet instructive stumbling block that tested Kaiber’s resilience and engineering responsiveness. The intelligent timeout refresh script protected data integrity and salvaged thousands of user sessions, paving the way for smarter, more reliable content production systems.

Self-repairing infrastructure like this could become an industry standard, underscoring that automation and foresight are critical pillars in the age of generative media.